Deploying Logstash with Docker to Sync MySQL

Published: 2021-05-08 06:00:09

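Preface

A junior colleague bought a course but had no server, so I set up an extra service for him to learn on. This post records the process.

Versions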

docker-compose version 1.26.2

logstash:6.8.12

elasticsearch:6.8.12

elasticsearch-head:6

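Directory structure

    |-- config
    |   |-- jvm.options                       # JVM settings
    |   `-- logstash.yml
    `-- pipeline
        |-- foodie-items.sql                  # SQL kept in a separate file for decoupling
        |-- logstash-test.conf                # Logstash pipeline configuration
        |-- mysql-connector-java-5.1.41.jar   # MySQL driver (supply your own)
        `-- pipelines.yml                     # pipeline definitions

1. Prepare the configuration files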

1.1 Create the mount directories

    mkdir -p /opt/logstashsync/{config,pipeline}

1.2 jvm.options

    [root@eddie config]# cat jvm.options
    ## JVM configuration

    # Xms represents the initial size of total heap space
    # Xmx represents the maximum size of total heap space
    # df
    #-Xms1g
    #-Xmx1g
    -Xms512m
    -Xmx512m

    ################################################################
    ## Expert settings
    ################################################################
    ##
    ## All settings below this section are considered
    ## expert settings. Don't tamper with them unless
    ## you understand what you are doing
    ##
    ################################################################

    ## GC configuration
    -XX:+UseConcMarkSweepGC
    -XX:CMSInitiatingOccupancyFraction=75
    -XX:+UseCMSInitiatingOccupancyOnly

    ## Locale
    # Set the locale language
    #-Duser.language=en
    # Set the locale country
    #-Duser.country=US
    # Set the locale variant, if any
    #-Duser.variant=

    ## basic
    # set the I/O temp directory
    #-Djava.io.tmpdir=$HOME
    # set to headless, just in case
    -Djava.awt.headless=true
    # ensure UTF-8 encoding by default (e.g. filenames)
    -Dfile.encoding=UTF-8
    # use our provided JNA always versus the system one
    #-Djna.nosys=true
    # Turn on JRuby invokedynamic
    -Djruby.compile.invokedynamic=true
    # Force Compilation
    -Djruby.jit.threshold=0
    # Make sure joni regexp interruptability is enabled
    -Djruby.regexp.interruptible=true

    ## heap dumps
    # generate a heap dump when an allocation from the Java heap fails
    # heap dumps are created in the working directory of the JVM
    -XX:+HeapDumpOnOutOfMemoryError
    # specify an alternative path for heap dumps
    # ensure the directory exists and has sufficient space
    #-XX:HeapDumpPath=${LOGSTASH_HOME}/heapdump.hprof

    ## GC logging
    #-XX:+PrintGCDetails
    #-XX:+PrintGCTimeStamps
    #-XX:+PrintGCDateStamps
    #-XX:+PrintClassHistogram
    #-XX:+PrintTenuringDistribution
    #-XX:+PrintGCApplicationStoppedTime
    # log GC status to a file with time stamps
    # ensure the directory exists
    #-Xloggc:${LS_GC_LOG_FILE}

    # Entropy source for randomness
    -Djava.security.egd=file:/dev/urandom

1.2 logstash.yml

    [root@eddie config]# cat logstash.yml
    config:
      reload:
        automatic: true
        interval: 3s
    xpack:
      management.enabled: false
      monitoring.enabled: false
    #path.config: /usr/share/logstash/config/conf.d/*.conf
    #path.logs: /usr/share/logstash/logs
    # The settings below make the Logstash status visible in Kibana
    #xpack.monitoring.enabled: true
    #xpack.monitoring.elasticsearch.username: "logstash46"
    #xpack.monitoring.elasticsearch.password: "123456"
    #xpack.monitoring.elasticsearch.hosts: ["http://xx.xx.xx.xx:9200"]

1.3 pipelines.yml

    [root@eddie pipeline]# cat pipelines.yml
    - pipeline.id: logstash-test
      pipeline.workers: 2
      pipeline.batch.size: 8000
      pipeline.batch.delay: 10
      path.config: "/usr/share/logstash/pipeline/logstash-test.conf"

1.4 logstash-test.conf

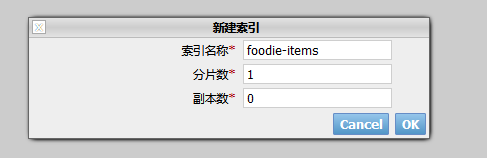

    [root@eddie pipeline]# cat logstash-test.conf
    input {
      jdbc {
        # MySQL/MariaDB JDBC URL and database name
        jdbc_connection_string => "jdbc:mysql://127.0.0.1:3306/foodie-shop?useUnicode=true&characterEncoding=UTF-8&autoReconnect=true"
        # Username and password
        jdbc_user => "root"
        jdbc_password => "123456"
        # Location of the database driver; absolute or relative path
        jdbc_driver_library => "/usr/share/logstash/pipeline/mysql-connector-java-5.1.41.jar"
        # Driver class name
        jdbc_driver_class => "com.mysql.jdbc.Driver"
        # Enable paging
        jdbc_paging_enabled => "true"
        # Rows per page; adjust as needed
        jdbc_page_size => "1000"
        # Path of the SQL file to run (a statement can also be written inline: statement => select * from table t1 where name = 'eddie')
        statement_filepath => "/usr/share/logstash/pipeline/foodie-items.sql"
        # Cron-style schedule; the five fields are minute, hour, day of month, month, day of week. All * means the job runs once per minute
        schedule => "* * * * *"
        # Index type
        type => "_doc"
        # Track the value of tracking_column (here the last update time) instead of the last run time; it is saved to the last_run_metadata_path file
        use_column_value => true
        # File that stores the last tracked value
        last_run_metadata_path => "/usr/share/logstash/pipeline/track_time"
        # When use_column_value is true, the column to track; an auto-increment id or a timestamp
        tracking_column => "updated_time"
        # Type of the tracking_column field
        tracking_column_type => "timestamp"
        # Whether to clear the last_run_metadata_path record; true re-queries every row from the start on each run
        clean_run => false
        # Whether to convert column names to lowercase
        lowercase_column_names => false
      }
    }
    output {
      elasticsearch {
        # Elasticsearch address
        hosts => ["xx.xx.xx.xx:9200"]
        # Name of the index to sync into
        index => "foodie-items"
        # Use the row id as the document _id (switch to the commented line if the SQL aliases id as itemId)
        document_id => "%{id}"
        # document_id => "%{itemId}"
      }
      # Log each event to stdout
      stdout {
        codec => json_lines
      }
    }

1.5 Create the index with elasticsearch-head
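For readers without the elasticsearch-head UI at hand, the same index can be created over the REST API. The following is only a minimal sketch: the host `http://localhost:9200` and the shard and replica counts are placeholder assumptions, not values from this post, and no field mappings are defined. It builds the request without sending it, so it runs without a live cluster.

```python
import json
import urllib.request


def build_create_index_request(base_url, index, shards=1, replicas=0):
    """Build (but do not send) a PUT request that creates an index.

    shards/replicas are illustrative defaults, not values from the post.
    """
    body = {"settings": {"number_of_shards": shards, "number_of_replicas": replicas}}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{index}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# Hypothetical host; replace with the address used in the output section.
req = build_create_index_request("http://localhost:9200", "foodie-items")
print(req.get_method(), req.full_url)

# To actually send it (requires a reachable Elasticsearch node):
# with urllib.request.urlopen(req) as resp:
#     print(resp.read().decode("utf-8"))
```

The index name matches the `index => "foodie-items"` setting in the output section, so Logstash writes into the index created here.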

2. Prepare the database

2.1 DDL

    CREATE TABLE `items` (
      `id` varchar(64) NOT NULL COMMENT 'Item primary key id',
      `item_name` varchar(32) NOT NULL COMMENT 'Item name',
      `cat_id` int(11) NOT NULL COMMENT 'Category foreign key id',
      `root_cat_id` int(11) NOT NULL COMMENT 'Top-level category foreign key id',
      `sell_counts` int(11) NOT NULL COMMENT 'Cumulative sales',
      `on_off_status` int(11) NOT NULL COMMENT 'Listing status, 1: listed, 2: delisted',
      `content` text NOT NULL COMMENT 'Item content',
      `created_time` datetime NOT NULL COMMENT 'Creation time',
      `updated_time` datetime NOT NULL COMMENT 'Update time',
      PRIMARY KEY (`id`)
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COMMENT='Items table; related tables: category, item image, item spec, item parameter';

    CREATE TABLE `items_img` (
      `id` varchar(64) NOT NULL COMMENT 'Image primary key',
      `item_id` varchar(64) NOT NULL COMMENT 'Item foreign key id',
      `url` varchar(128) NOT NULL COMMENT 'Image URL',
      `sort` int(11) NOT NULL COMMENT 'Image order, ascending',
      `is_main` int(11) NOT NULL COMMENT 'Whether this is the main image, 1: yes, 0: no',
      `created_time` datetime NOT NULL COMMENT 'Creation time',
      `updated_time` datetime NOT NULL COMMENT 'Update time',
      PRIMARY KEY (`id`)
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COMMENT='Item images';

2.2 foodie-items.sql

    [root@eddie pipeline]# cat foodie-items.sql
    select
        i.id as itemId,
        i.item_name as itemName,
        i.sell_counts as sellCounts,
        ii.url as imgUrl,
        tempSpec.price_discount as price,
        i.updated_time as updated_time
    from items i
    left join items_img ii
        on i.id = ii.item_id
    left join (select item_id, min(price_discount) as price_discount from items_spec group by item_id) tempSpec
        on i.id = tempSpec.item_id
    where ii.is_main = 1
      and i.updated_time >= :sql_last_value

The condition i.updated_time >= :sql_last_value means that only rows whose updated_time is at or after the last tracked value are synced to Elasticsearch.
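To make the incremental behaviour concrete, here is a small simulation of the tracking logic. It is a sketch, not Logstash's actual implementation: it uses an in-memory SQLite table with a simplified `items` schema, and the helper names `fetch_changed` and `sync_once` are invented for illustration.

```python
import sqlite3


def fetch_changed(conn, last_value):
    """Return rows whose updated_time is at or after last_value, oldest first."""
    cur = conn.execute(
        "SELECT id, item_name, updated_time FROM items "
        "WHERE updated_time >= ? ORDER BY updated_time",
        (last_value,),
    )
    return cur.fetchall()


def sync_once(conn, last_value):
    """One polling cycle: fetch changed rows, then advance the tracked value."""
    rows = fetch_changed(conn, last_value)
    if rows:
        # Mirrors tracking_column: remember the largest updated_time seen.
        last_value = max(r[2] for r in rows)
    return rows, last_value


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id TEXT PRIMARY KEY, item_name TEXT, updated_time TEXT)")
conn.executemany(
    "INSERT INTO items VALUES (?, ?, ?)",
    [
        ("a1", "cake", "2021-05-01 10:00:00"),
        ("a2", "tea", "2021-05-02 09:30:00"),
    ],
)

# The first run starts from the epoch, so every row is picked up.
rows, last_value = sync_once(conn, "1970-01-01 00:00:00")
print(len(rows), last_value)

# A later update moves row a1 past the tracked value.
conn.execute("UPDATE items SET updated_time = '2021-05-03 08:00:00' WHERE id = 'a1'")
rows, last_value = sync_once(conn, last_value)
print([r[0] for r in rows], last_value)
```

The second cycle returns `a2` as well as `a1`, because `>=` re-selects the row sitting exactly on the boundary. Setting `document_id` in the output makes that repeat harmless: the same document is overwritten rather than duplicated.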

3. Start in a container

3.1.1 Run directly with docker run

    docker run -d \
      -v /opt/logstashsync/config/logstash.yml:/usr/share/logstash/config/logstash.yml \
      -v /opt/logstashsync/config/jvm.options:/usr/share/logstash/config/jvm.options \
      -v /opt/logstashsync/pipeline/logstash-test.conf:/usr/share/logstash/pipeline/logstash-test.conf \
      -v /opt/logstashsync/pipeline/pipelines.yml:/usr/share/logstash/pipeline/pipelines.yml \
      -v /opt/logstashsync/pipeline/mysql-connector-java-5.1.41.jar:/usr/share/logstash/pipeline/mysql-connector-java-5.1.41.jar \
      --name=logstash \
      docker.elastic.co/logstash/logstash:6.8.12

3.1.2 Copy the SQL file

    docker cp /opt/logstashsync/pipeline/foodie-items.sql logstash:/usr/share/logstash/pipeline/

3.1.3 Enter the container and run Logstash

    docker exec -it logstash bash
    # then, inside the container:
    /usr/share/logstash/bin/./logstash -f /usr/share/logstash/pipeline/logstash-test.conf --path.data=/usr/share/logstash/pipeline/data

3.2.1 Use docker-compose

    [root@eddie pipeline]# vim docker-compose.yml
    #author eddie
    #blog https://blog.eddilee.cn/
    version: "3"
    services:
      logstash:
        image: docker.elastic.co/logstash/logstash:6.8.12
        privileged: true
        restart: "always"
        ports:
          - 5044:5044
          - 9600:9600
        container_name: logstash
        volumes:
          - /opt/logstashsync/config/logstash.yml:/usr/share/logstash/config/logstash.yml
          - /opt/logstashsync/config/jvm.options:/usr/share/logstash/config/jvm.options
          - /opt/logstashsync/pipeline/logstash-test.conf:/usr/share/logstash/pipeline/logstash-test.conf
          - /opt/logstashsync/pipeline/pipelines.yml:/usr/share/logstash/pipeline/pipelines.yml
          - /opt/logstashsync/pipeline/mysql-connector-java-5.1.41.jar:/usr/share/logstash/pipeline/mysql-connector-java-5.1.41.jar

3.2.2 Commands

    # Start the container
    docker-compose up -d
    # List the containers
    docker-compose ps
    # Remove the container
    docker-compose down -v
    # Enter the container
    docker exec -it logstash bash
    # Follow the logs
    docker-compose logs -f
    # Run Logstash to sync into Elasticsearch
    /usr/share/logstash/bin/./logstash -f /usr/share/logstash/pipeline/logstash-test.conf --path.data=/usr/share/logstash/pipeline/data
    # -t only checks that the configuration file is valid
    /usr/share/logstash/bin/./logstash -f /usr/share/logstash/pipeline/logstash-test.conf -t --path.data=/usr/share/logstash/pipeline/data
    # Run the sync in the background
    nohup /usr/share/logstash/bin/./logstash -f /usr/share/logstash/pipeline/logstash-test.conf --path.data=/usr/share/logstash/pipeline/data &

Mounting a whole directory can fail because of user and group permission problems. You can work around this with --privileged=true, or by mounting individual files instead.
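A quick way to spot such permission problems before starting the container is to check, on the host, whether the mounted files are readable by the user the container runs as. The sketch below assumes that user is uid 1000 and gid 1000 (worth confirming with `docker exec logstash id`). The helper names are invented for illustration. It only looks at the classic owner, group and other read bits, so treat the result as a hint.

```python
import os
import stat


def readable_by(path, uid, gid):
    """Check the owner/group/other read bit that applies to uid and gid.

    Ignores ACLs, supplementary groups and root's override.
    """
    st = os.stat(path)
    if st.st_uid == uid:
        return bool(st.st_mode & stat.S_IRUSR)
    if st.st_gid == gid:
        return bool(st.st_mode & stat.S_IRGRP)
    return bool(st.st_mode & stat.S_IROTH)


def unreadable_mounts(paths, uid=1000, gid=1000):
    """Return the existing paths that the given uid/gid could not read."""
    return [p for p in paths if os.path.exists(p) and not readable_by(p, uid, gid)]


# Host-side files mounted into the container in this post.
mounts = [
    "/opt/logstashsync/config/logstash.yml",
    "/opt/logstashsync/config/jvm.options",
    "/opt/logstashsync/pipeline/logstash-test.conf",
    "/opt/logstashsync/pipeline/pipelines.yml",
    "/opt/logstashsync/pipeline/mysql-connector-java-5.1.41.jar",
]
for path in unreadable_mounts(mounts):
    print("not readable by uid 1000:", path)
```

Any path it prints is a candidate for `chmod` or `chown` on the host, which is a narrower fix than running the container privileged.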

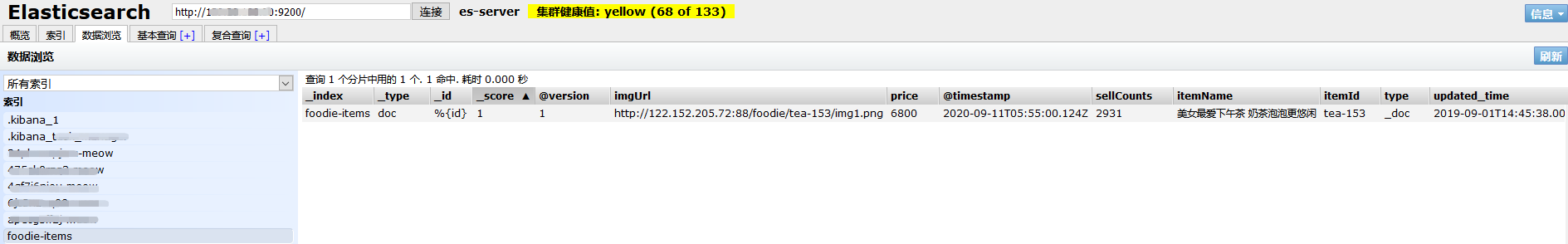

4. Result

5. Tuning for a low-spec server

    #author eddie
    #blog https://blog.eddilee.cn/
    version: "3"
    services:
      logstash:
        image: docker.elastic.co/logstash/logstash:6.8.12
        restart: "always"
        ports:
          - 5044:5044
          - 9600:9600
        deploy:
          resources:
            limits:
              cpus: '0.5'
              memory: 150m
    # WARNING: The following deploy sub-keys are not supported in compatibility mode and have been ignored: resources.reservations.cpus
    #        reservations:
    #          cpus: '0.5'
    #          memory: 128M
        container_name: logstash
        volumes:
          - /opt/logstashsync/config/logstash.yml:/usr/share/logstash/config/logstash.yml
          - /opt/logstashsync/config/jvm.options:/usr/share/logstash/config/jvm.options
          - /opt/logstashsync/pipeline/logstash-test.conf:/usr/share/logstash/pipeline/logstash-test.conf
          - /opt/logstashsync/pipeline/pipelines.yml:/usr/share/logstash/pipeline/pipelines.yml
          - /opt/logstashsync/pipeline/mysql-connector-java-5.1.41.jar:/usr/share/logstash/pipeline/mysql-connector-java-5.1.41.jar

Start it in compatibility mode so that the deploy limits are applied:

    docker-compose --compatibility up -d
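When capping container memory, it is worth checking that the JVM heap configured in jvm.options still fits under the cap. A heap larger than the container limit can get the process killed once the memory is actually used. The following sketch compares the two values; the helper names are invented for illustration, and the size parser only handles the simple `k`/`m`/`g` suffixes used in this post.

```python
import re

_UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_size(text):
    """Convert a size such as '512m' or '1g' to bytes (bare numbers are bytes)."""
    match = re.fullmatch(r"(\d+)([kmg]?)b?", text.strip().lower())
    if not match:
        raise ValueError(f"unrecognised size: {text!r}")
    number, unit = match.groups()
    return int(number) * _UNITS.get(unit, 1)


def max_heap(jvm_options_text):
    """Return the last uncommented -Xmx value in bytes, or None if absent."""
    heap = None
    for line in jvm_options_text.splitlines():
        line = line.strip()
        if line.startswith("-Xmx"):
            heap = parse_size(line[len("-Xmx"):])
    return heap


def heap_fits(jvm_options_text, container_limit):
    """True when no -Xmx is set or the heap is smaller than the container limit."""
    heap = max_heap(jvm_options_text)
    return heap is None or heap < parse_size(container_limit)


# Heap lines as they appear in the jvm.options from section 1.2.
sample = """
#-Xms1g
#-Xmx1g
-Xms512m
-Xmx512m
"""
print(heap_fits(sample, "150m"))
print(heap_fits(sample, "1g"))
```

With the values from this post, the check reports that a 512m heap does not fit under a 150m limit. Lowering `-Xms`/`-Xmx` or raising `memory` would bring the two in line, and the JVM also needs headroom beyond the heap itself.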
