Integrating Django ORM with Scrapy

Published: 2021-07-01 05:19:53


This post places a Scrapy project inside a Django project and uses Django's ORM to store Scrapy Items.

Step 1: In Scrapy's settings.py, set the crawler's environment to Django's by loading the Django environment:

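    import os
    import django

    # Load the Django environment; the second argument is "<Django project name>.settings"
    os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'Soufan_crawl.settings')
    django.setup()  # start up the Django environment so the ORM can be used

Step 2: Modify Scrapy's pipelines.py.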

Create a new pipeline:

    from apps.spider_manage.models import Data


    class DjangoORMPipeline:
        def process_item(self, item, spider):
            # item['data'] holds a list of records, each with 'title' and 'number'
            for data in item['data']:
                title = data['title']
                number = data['number']
                Data.objects.create(title=title, number=number)
            return item  # pass the item on to the next pipeline
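
The pipeline assumes a specific item shape: `item['data']` is a list of dicts, each with `'title'` and `'number'`. The Django-free sketch below mirrors the pipeline's loop so that shape can be checked without a database. `FakeManager` is a hypothetical stand-in for `Data.objects` and is not part of Django or Scrapy; the sample values are made up.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class FakeManager:
    """Hypothetical stand-in for Data.objects: records create() calls in memory."""

    rows: List[Dict[str, Any]] = field(default_factory=list)

    def create(self, **fields: Any) -> Dict[str, Any]:
        self.rows.append(fields)
        return fields


class DjangoORMPipeline:
    """Same loop as the pipeline above, with the manager injected instead of imported."""

    def __init__(self, objects: FakeManager) -> None:
        # In the real pipeline this would be Data.objects.
        self.objects = objects

    def process_item(self, item: Dict[str, Any], spider: Any) -> Dict[str, Any]:
        for data in item['data']:
            self.objects.create(title=data['title'], number=data['number'])
        return item  # pass the item on to the next pipeline


# Usage: one item carrying two records (made-up sample values).
manager = FakeManager()
pipeline = DjangoORMPipeline(manager)
item = {'data': [{'title': 'first', 'number': 1}, {'title': 'second', 'number': 2}]}
result = pipeline.process_item(item, spider=None)
```

Because the real `create()` issues one INSERT per record, an item carrying many records costs one query per record.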

Register it in settings.py:

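    ITEM_PIPELINES = {
        'data_acquisition.pipelines.DjangoORMPipeline': 2,
    }

For reference, the `Data` model:

    from django.db import models
    from django.utils.timezone import now


    class Data(models.Model):
        title = models.CharField(max_length=128)
        number = models.IntegerField()
        create_time = models.DateTimeField(default=now)  # callable, so the time is taken per row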
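
The `2` in `ITEM_PIPELINES` is the pipeline's order value: per the Scrapy documentation, items pass through enabled pipelines from lower to higher values, conventionally in the 0 to 1000 range, and a value of `None` disables a component. The sketch below only imitates that ordering rule to show where `DjangoORMPipeline` would run. `pipeline_order` is a hypothetical helper, not a Scrapy API, and the `DuplicatesPipeline` and `JsonWriterPipeline` entries are made-up examples.

```python
from typing import Dict, List, Optional


def pipeline_order(item_pipelines: Dict[str, Optional[int]]) -> List[str]:
    """Return pipeline paths in the order an item would visit them (lowest value first).

    Hypothetical helper imitating Scrapy's ordering rule; entries set to None are
    skipped, mirroring how a None value disables a component.
    """
    enabled = {path: value for path, value in item_pipelines.items() if value is not None}
    return sorted(enabled, key=lambda path: enabled[path])


ITEM_PIPELINES = {
    'data_acquisition.pipelines.DjangoORMPipeline': 2,
    'data_acquisition.pipelines.JsonWriterPipeline': 300,  # made-up example
    'data_acquisition.pipelines.DuplicatesPipeline': 1,    # made-up example
}

order = pipeline_order(ITEM_PIPELINES)
```

With a value of 2, the database write happens early; a pipeline that cleans or filters items needs a lower value if it must run before the write.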

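This makes saving data a little more convenient, but it has a real drawback: settings.py now calls django.setup() as soon as Scrapy loads it, so every scrapy command fails unless the Django project package (Soufan_crawl) is importable. Normal Scrapy debugging stops working. For example, running scrapy from the Scrapy project directory: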

    (Py3_spider) Soufan_crawl\data_acquisition> scrapy
    Traceback (most recent call last):
      File "c:\users\onefine\appdata\local\programs\python\python37\Lib\runpy.py", line 193, in _run_module_as_main
        "__main__", mod_spec)
      File "c:\users\onefine\appdata\local\programs\python\python37\Lib\runpy.py", line 85, in _run_code
        exec(code, run_globals)
      File "C:\Users\ONEFINE\Envs\Py3_spider\Scripts\scrapy.exe\__main__.py", line 9, in <module>
      File "c:\users\onefine\envs\py3_spider\lib\site-packages\scrapy\cmdline.py", line 110, in execute
        settings = get_project_settings()
      File "c:\users\onefine\envs\py3_spider\lib\site-packages\scrapy\utils\project.py", line 68, in get_project_settings
        settings.setmodule(settings_module_path, priority='project')
      File "c:\users\onefine\envs\py3_spider\lib\site-packages\scrapy\settings\__init__.py", line 292, in setmodule
        module = import_module(module)
      File "c:\users\onefine\envs\py3_spider\lib\importlib\__init__.py", line 127, in import_module
        return _bootstrap._gcd_import(name[level:], package, level)
      File "<frozen importlib._bootstrap>", line 1006, in _gcd_import
      File "<frozen importlib._bootstrap>", line 983, in _find_and_load
      File "<frozen importlib._bootstrap>", line 967, in _find_and_load_unlocked
      File "<frozen importlib._bootstrap>", line 677, in _load_unlocked
      File "<frozen importlib._bootstrap_external>", line 728, in exec_module
      File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed
      File "D:\PythonProject\DjangoProject\Soufan_crawl\data_acquisition\data_acquisition\settings.py", line 15, in <module>
        django.setup()  # start up the Django environment
      File "c:\users\onefine\envs\py3_spider\lib\site-packages\django\__init__.py", line 19, in setup
        configure_logging(settings.LOGGING_CONFIG, settings.LOGGING)
      File "c:\users\onefine\envs\py3_spider\lib\site-packages\django\conf\__init__.py", line 57, in __getattr__
        self._setup(name)
      File "c:\users\onefine\envs\py3_spider\lib\site-packages\django\conf\__init__.py", line 44, in _setup
        self._wrapped = Settings(settings_module)
      File "c:\users\onefine\envs\py3_spider\lib\site-packages\django\conf\__init__.py", line 107, in __init__
        mod = importlib.import_module(self.SETTINGS_MODULE)
      File "c:\users\onefine\envs\py3_spider\lib\importlib\__init__.py", line 127, in import_module
        return _bootstrap._gcd_import(name[level:], package, level)
      File "<frozen importlib._bootstrap>", line 1006, in _gcd_import
      File "<frozen importlib._bootstrap>", line 983, in _find_and_load
      File "<frozen importlib._bootstrap>", line 953, in _find_and_load_unlocked
      File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed
      File "<frozen importlib._bootstrap>", line 1006, in _gcd_import
      File "<frozen importlib._bootstrap>", line 983, in _find_and_load
      File "<frozen importlib._bootstrap>", line 965, in _find_and_load_unlocked
    ModuleNotFoundError: No module named 'Soufan_crawl'
    (Py3_spider) Soufan_crawl\data_acquisition>
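
The last line of the traceback names the root cause: when scrapy runs from `Soufan_crawl\data_acquisition`, the package `Soufan_crawl` is not on `sys.path`, so Django cannot import the module named in `DJANGO_SETTINGS_MODULE`. The sketch below is a hypothetical pre-flight check (`settings_module_importable` is not part of Django or Scrapy) that asks Python's import system whether a dotted module name can be found from the current `sys.path`, without running `django.setup()`.

```python
import importlib.util


def settings_module_importable(dotted_name: str) -> bool:
    """Return True if dotted_name (e.g. 'Soufan_crawl.settings') can be found on sys.path.

    find_spec() imports the parent packages of a dotted name; a missing parent raises
    ModuleNotFoundError, the same error the traceback above ends with.
    """
    try:
        return importlib.util.find_spec(dotted_name) is not None
    except ModuleNotFoundError:
        return False


# Run from a directory where the Django project root is not on sys.path:
ok = settings_module_importable('Soufan_crawl.settings')
```

A `False` result corresponds to the `ModuleNotFoundError` above: the directory containing the `Soufan_crawl` package is not importable at the moment `django.setup()` runs.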

So this approach is not recommended. If you want to use Django's ORM, use scrapy-djangoitem directly instead, as described below.

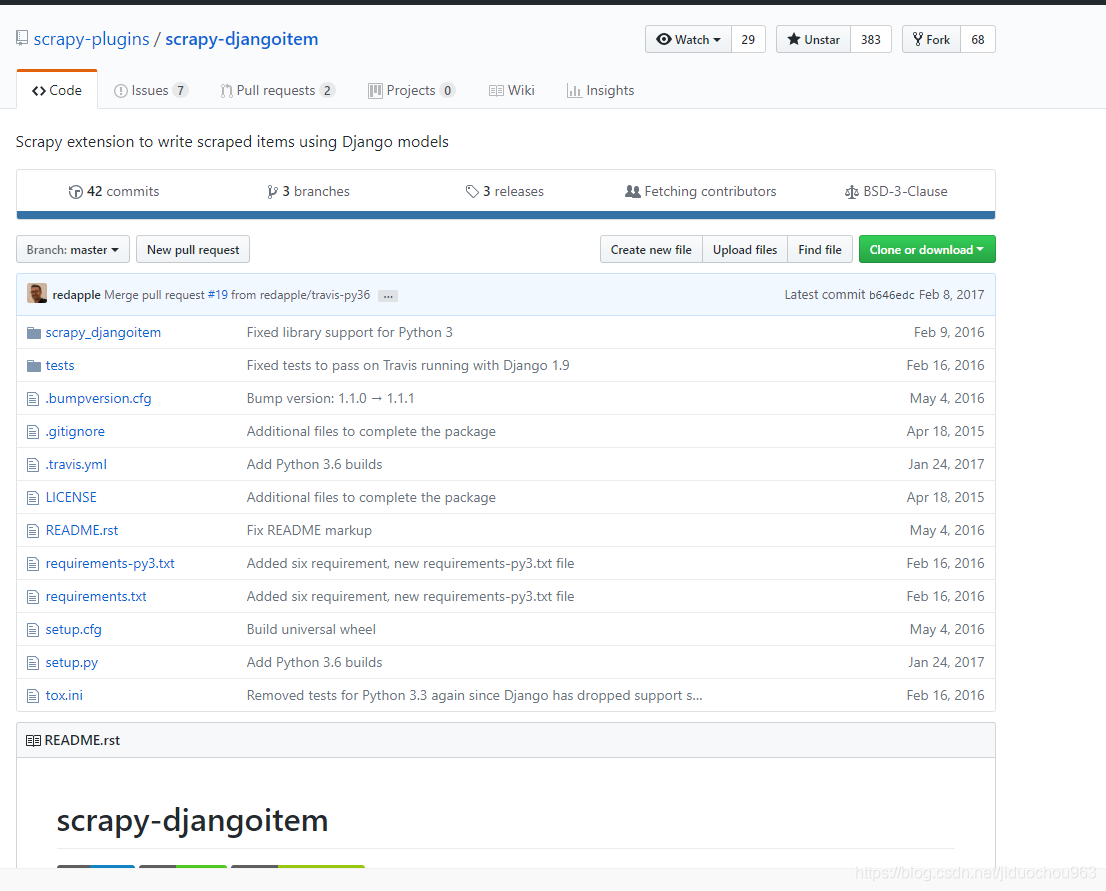

Using scrapy-djangoitem

Its GitHub page documents usage in enough detail, so only the GitHub address is given here.

Appendix:

GitHub repository

Reposted from: https://onefine.blog.csdn.net/article/details/86849865
